While working on a recent project to replace a low code platform with the Power Platform, one of the requirements that we had was to replace some of the Excel export and import functionalities that the existing system had to be able to send large Excel files into various other systems to be analyzed and consumed.

Before I start going into the details here, let’s get a quick understanding of the business requirements. We needed to be able to query the data from Dataverse from multiple data sources and multiple levels, so that we could retrieve the correct data sets. We also needed to export the data from Dataverse into Microsoft Excel files that contains a vast number of records, which using the basic Excel functionality would be a massive performance issue. Lastly we needed to do some data comparisons and update data back into Dataverse after comparing tens of thousands of records.

So, let’s start and looking at what needed to be done here step by step. The first thing that I mentioned, is querying data across multiple tables and multiple data sources. Imagine that you had to write a nested query or fetch xml getting data from multiple tables nested multiple levels with multiple types of relationships from within Dataverse directly. Seems complicated and in some scenarios would be almost impossible. Let’s think in a matter of SQL. How hard would it be to write the same requirements using an SQL Query. Much easier I would say, and definitely possible. TDS is an option, but not realistic when you have to do certain things dynamically and with no access to SQL Server itself.

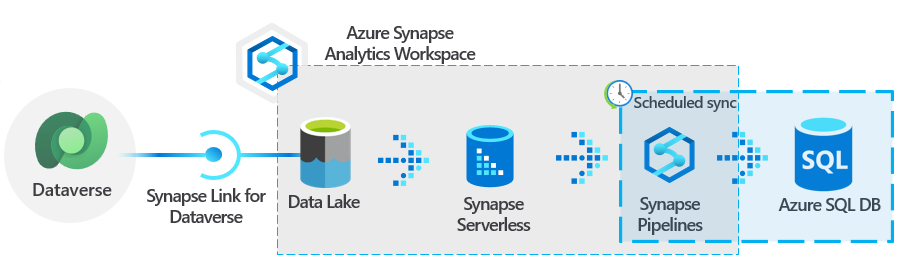

An even better option is using Azure Synapse Link for Dataverse with Azure Data Lake. Azure Synapse Link, formerly known as Export to data lake provides the ability to get a copy of the data that you have in Dataverse and put it in a Gen2 Azure Data Lake Storage. From there you can move the data to Azure Synapse Analytics or even a separate SQL Server. Either option will allow you to query your data directly using SQL Queries, but also create Azure Data Factory (ADF) pipelines that can be scheduled to run at particular intervals or executing an ADF pipeline using the Azure Data Factory connector that is available from Logic Apps, Power Apps and Power Automate.

The results of the process from ADF can be stored in a SQL Server, separate table or any other source that would be easy to consume that data from Power Automate or Logic Apps. There are many different ways to write the data to Excel, but these have to be done outside of ADF, as ADF currently does not support a Sink for Exporting to Excel.

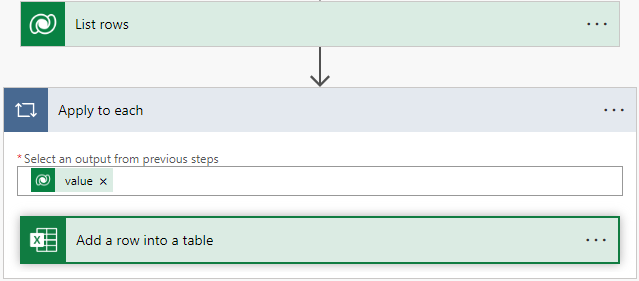

Now that we have resolved the issue of getting the data that we need for the export, let’s take a look at how we export it directly to Excel. Whether I store the data back into a separate Dataverse table or in a SQL Server table somewhere, I need to read the Data using the SQL Server connector or the Dataverse connector using List rows. Let’s imagine that I have 20,000 rows to Export, how long do you think it would take to run the following logic?

This would probably take hours, and I believe it would probably crash somewhere in the middle. Don’t think this would be the right choice. We can always do a csv file, but that might not be the format that the business is looking for. So what is the alternative? The Microsoft Excel (Business) connector has two actions that can be used:

- Run script

- Run script from SharePoint library (currently in preview)

Both of these actions allow us to have a script that will populate the data in Microsoft Excel file based on a script file that we can create. The creation of the script file might be a little more cumbersome than using the Add a row in a table action but from a performance standpoint this will run a lot faster and will allow the file to be created according to requirements. You can see some of these examples using Power Automate on the Microsoft learn web site or you can learn more about the Fundamentals for Office Scripts in Excel here.

Please note that there are limitations with using Office Scripts such as the size of requests and responses, limit of the number of Run script actions per day, the range limitations and the maximum size of parameters that are passed to the Run script action (30M bytes). All of these can be dealt with by making sure that your Power Automate or logic app process is configured correctly.

If you need to call the Run Script more than 1600 calls per day, you should consider using different service accounts in different environments as that limit is per user, and if needed separate the logic to run by different users as needed as well. If your parameter sizes will be larger that the 30M bytes, than you will need to split them into multiple batches and make sure your requests and responses are small than 5MB in size. Take a look at the Platform limits and requirements with Office Scripts page on Microsoft Learn.

There are a lot of scenarios where this can be helpful, but just because we are using a low code platform does not mean that extending it and using some more complex logic and scenarios will not help us achieve the results.